NESCALA 2013

Premature Optimization

Erik Osheim (@d6)

YMMV

This talk is informed by my experiences benchmarking and optimizing Scala while working on:

- GeoTrellis

- Spire

- Debox

- ...and at Precog.

Performance tuning is fiddly. It's hard to speak in generalities. I'm still learning.

What are we talking about?

This talk mainly covers low-level optimization.

What are we talking about?

This talk mainly covers low-level optimization.

The plan is to increase run-time performance by producing better bytecode for the JVM.

What are we talking about?

This talk mainly covers low-level optimization.

The plan is to increase run-time performance by producing better bytecode for the JVM.

(Specifically, better bytecode for HotSpot.)

How will we do this?

By focusing on how Scala code is written, and via profiling and benchmarking.

I like using Caliper and JProfiler. Other tools work too.

How else could you do this?

Of course, you can always do other things:

How else could you do this?

Of course, you can always do other things:

- Buy more (or bigger) machines

- Buy a better JVM

- Tune your existing app via JVM parameters

- Use JNI/JNA to call out to native code

- Use parallelism to apply more processors

How else could you do this?

Of course, you can always do other things:

- Buy more (or bigger) machines

- Buy a better JVM

- Tune your existing app via JVM parameters

- Use JNI/JNA to call out to native code

- Use parallelism to apply more processors

These are all good strategies. But they are not the focus of this talk.

Why is it so important to worry about HotSpot?

Why is it so important to worry about HotSpot?

- Inlining: small methods (<35 bytes) can be inlined

- Array range check elimination: speed up tight loops

- Faster virtual method calls (with 1 or 2 concrete methods)

- ...and much more!

Why is it so important to worry about HotSpot?

- Inlining: small methods (<35 bytes) can be inlined

- Array range check elimination: speed up tight loops

- Faster virtual method calls (with 1 or 2 concrete methods)

- ...and much more!

HotSpot does a ton of useful stuff! Check out HotSpot Internals.

What can foil HotSpot?

- Megamorphic dispatch: (2+ concrete methods for a virtual call)

- Exceeding 35 bytes for small/fast methods

- Error-checking and edge-cases in the "hot path"

- Complicated loop tests (e.g. method calls in while())

- Too much "manual inlining"

- Not enough warmup/method calls (e.g. hitting OSR)

- Too much bytecode ("CodeCache is full. Compiler has been disabled.")

How important is HotSpot? VERY IMPORTANT

(Proper Inlining can give a 10x speed up by itself.)

How do common Scala patterns interact with HotSpot?

The Good

Scala encourages lots of small methods, which inline well.

The JVM deals very well with composition.

Assumptions of immutability mean fewer defensive checks.

How do common Scala patterns interact with HotSpot?

The Bad

The biggest problem is garbage.

Scala is a litter-bug, and idiomatic Scala produces tons of garbage.

(But since Scala is so expressive, you don't see it unless you know where to look.)

How do common Scala patterns interact with HotSpot?

The Ugly

Scala produce lots of classfiles (potentially squeezing permgen/code cache).

Due to being more functional/expressive, the "inlining problem" hurts us more.

Why is Scala a litter-bug?

Boxing.

How many java.lang.Integer instances do you see?

val ns = Vector(1, 2, 3, 4, 5)

val squares = ns.map(n => n * n)

val sum = squares.foldLeft(0)(_ + _)

Why is Scala a litter-bug?

Collections API.

How many :: instances do you see?

def everySecondElem(ns: List[Int]) = ns.

zipWithIndex.

filter(_._2 % 2 == 1).

map(_._1)

(Also, how many iterations do you see? How many boxes?)

Why is Scala a litter-bug?

Short-lived immutable objects.

How many Tuple2 instances do you see?

def minAndMax(ns: Array[Int]): (Int, Int) = {

if (ns.isEmpty) sys.error("oh no!")

ns.foldLeft((ns(0), ns(0))) {

case ((amin, amax), a) =>

(min(amin, a), max(amax, a))

}

}

Similar things happen with Option.

Why is Scala a litter-bug?

By-name parameters and Functions.

def f(x: Int, y: => Int): Int =

if (x == 0) x else x * y

def g(ns: Array[Int]): Array[Int] =

ns.map(n => f(n, 9))

def h(as: Array[Int], bs: Array[Int]) =

as.map(a => bs.map(_ + a))

(I'm looking at scalaz.Semigroup[S]#append.)

Why is Scala a litter-bug?

Implicit Enrichment.

implicit class addTwice(n: Double) {

def twice = n * 2

}

def foo(ns: Array[Double]) =

ns.map(_.twice)

def bar(ns: Array[Double]) =

ms.map(n => if(n.isNaN) 0.0 else n)

(Look out for conversions to RichInt, RichDouble, etc.)

So I have to write Java?

So I have to write Java?

No!

Then what?

First, let's note that the JVM actually does fine with Scala most of the time.

Then what?

First, let's note that the JVM actually does fine with Scala most of the time.

Modern garbage collectors are optimized to deal with short-lived objects.

Doing a relatively inefficient thing a few times (usually) doesn't matter.

Then what?

Problems occur in situations where:

- We have O(n) (or worse) allocations.

- n gets really big.

So, we want the ratio of actual work to allocations to be high.

When is this?

Often developers will have a good intuition about which code is in a "critical path".

(But sometimes we make mistakes.)

That's why we have profilers (Jprofiler, YourKit, etc).

What about Knuth?

Donald Knuth cautions us about premature optimization:

We should forget about small efficiencies, say about 97% of the time: premature optimization is the root of all evil.

What about Knuth?

However, Knuth also says:

In established engineering disciplines a 12 % improvement, easily obtained, is never considered marginal and I believe the same viewpoint should prevail in software engineering.

What about Knuth?

Most of the techniques here provide (at least) 2x speed up.

But are they "easily obtained"?

Erik's "premature optimization" tips

- Avoid allocations in a tight loop.

- (Especially, avoid boxing in a tight loop.)

- Prefer arrays when possible.

- Avoid Scala collections API methods in a tight loop.

- Instead, write custom methods to do exactly what needs to be done.

- Prefer transient mutable context objects to repeated allocation.

- Use var (or better, @tailrec) rather than folding over tuples.

- Avoid mutable fields, field access. Prefer local variables.

- Pay attention to which classes are @specialized

Why do you hate functional programming?

I don't hate functional programming.

In fact, I think functional programming informs this style.

Why do you hate functional programming?

- Long-lived/shared data is precisely what should be immutable.

- With transient data it's not as big a deal.

- Methods using local vars can still be referentially transparent.

- It's all about minimizing side-effects and cross-talk.

This stye is useful precisely when you've been doing functional programming and find certain hot spots that need to be faster.

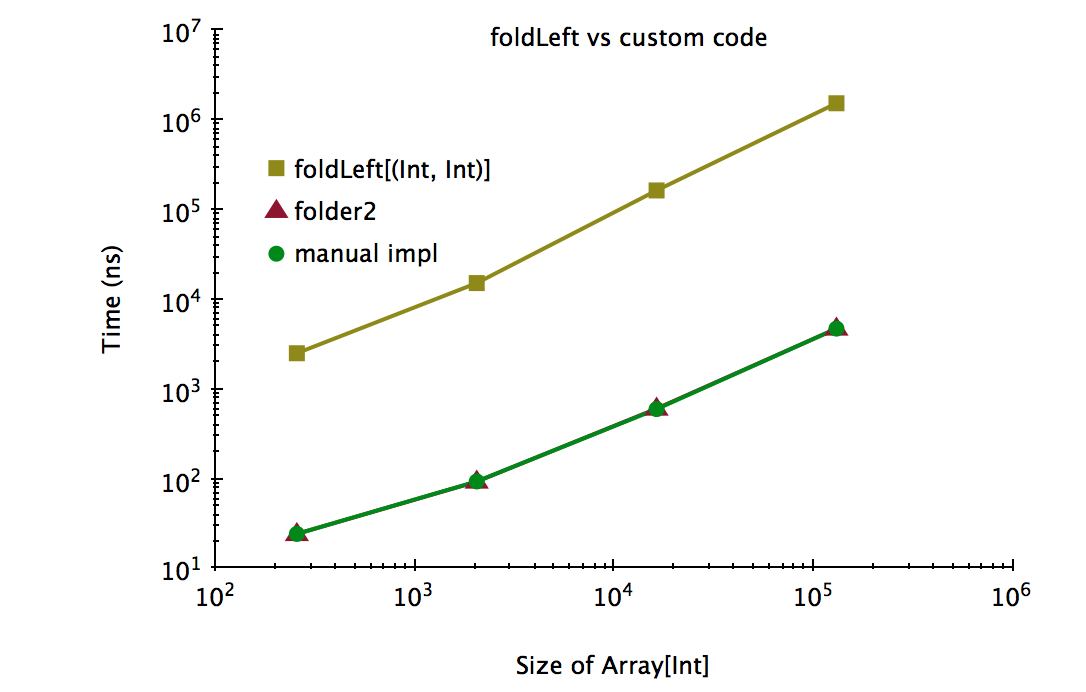

Case Study #1: foldLeft over Array[Int]

Let's reconsider some earlier example code:

val a = as(0)

as.foldLeft((a, a)) {

(tpl, a) =>

(min(tpl._1, a), max(tpl._2, a))

}

How can we do better?

Case Study #1: foldLeft over Array[Int]

Let's reconsider some earlier example code:

def folder2[@spec(Int) A, @spec(Int) B, @spec(Int) C]

(arr: Array[A])

(b: B, c: C)

(f1: (B, A) => B)

(f2: (C, A) => C):

(B, C) = { ... }

Case Study #1: foldLeft over Array[Int]

Let's reconsider some earlier example code:

def folder2[...](...): (B, C) = {

var state1 = b

var state2 = c

var i = 0

val len = arr.length

while (i < len) {

val a = arr(i)

state1 = f1(state1, a)

state2 = f2(state2, a)

i += 1

}

(state1, state2)

}

Case Study #1: foldLeft over Array[Int]

That looks moderately horrible. I hope it's worth it!

Case Study #1: foldLeft over Array[Int]

It is 100-330x faster.

Case Study #1: foldLeft over Array[Int]

For n=256, 100x means 25ns versus 2.4us.

For n=131072, 330x means 4.7us versus 1.5ms!!

@specialized?

Allows you to write generic code but (usually) avoid boxing.

Specialization is an incredibly subtle, difficult, and necessary tool.

If I was a character in a norse saga, specialization would be my tragic flaw

(the thing that shows up to kill me at Ragnarok).

@specialized?

Code you might write:

def mySum[@spec A:Ring](ns: List[A], total:A): A =

ns match {

case n :: ns => mySum(ns, Ring[A].plus(total, n))

case Nil => total

}

mySum[Int](ns, 0)

@specialized?

After specialization phrase (give-or-take):

def mySum$mIc$sp(ns: List[Int], total:Int)

(implicit ev:Ring$mcI$sp): Int =

ns match {

case n :: ns =>

mySum(

ns,

new RingOps$mcI$sp(total)(ev).plus$mcI$sp(n)

)

case Nil => total

}

mySum$mIc$sp(ns, 0)(Ring.IntIsRing)

@specialized: Too Many Dragons

There are a lot of gotchas:

- Need to inherit from traits, not classes

- Fields are duplicated in specialized variants

- Must specialize "all the way through" to direct types

- Methods specialize well, field access/constructors less well

- AnyRef + AnyVal don't mix well (RIP AnyRef specialization)

- Keep it simple! (Avoid inner classes, type members, fanciness)

- There are bugs so make sure to test (javap is your friend)

@specialized: Too Many Dragons

The best way to use specialized is with very simple classes/traits.

Basis only uses specialized traits and does "manual specialization" with codegen. This is not a bad idea.

The code will often end up being ugly. This is OK.

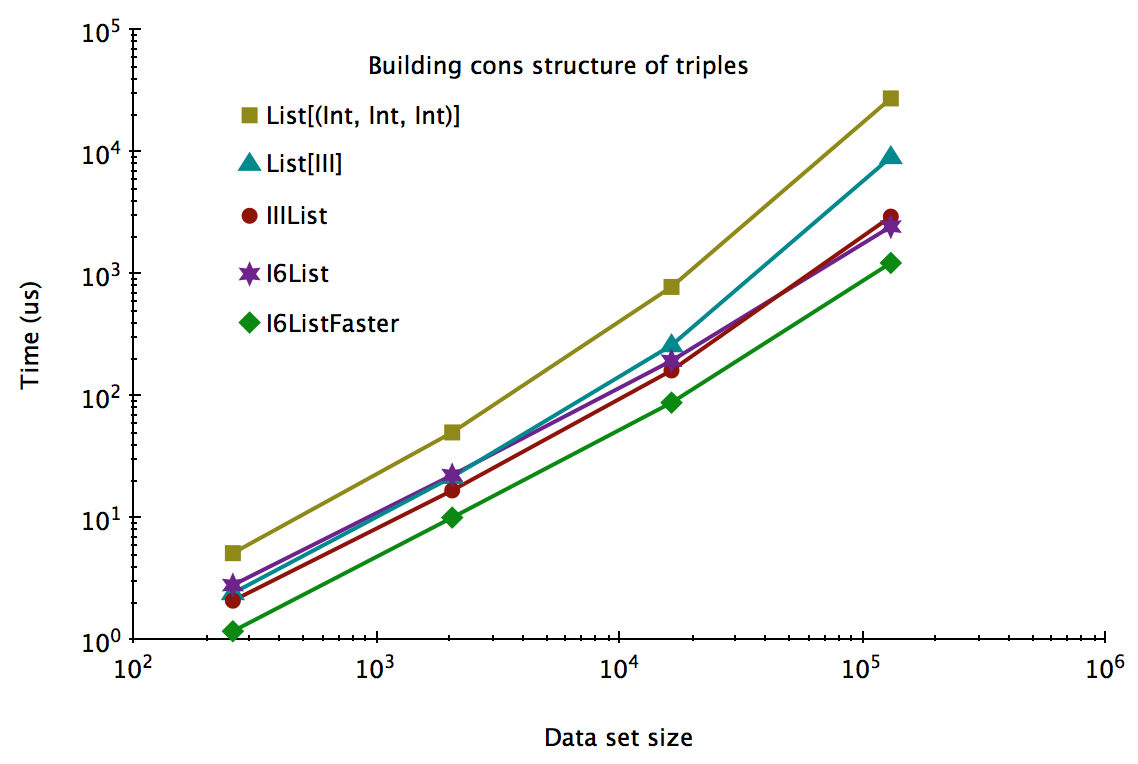

Case Study #2: building List[(Int, Int, Int)]

Sometimes List is really what you want (i.e. a LIFO)

How well can we do in these cases?

Case Study #2: building List[(Int, Int, Int)]

Given as, bs, and cs that are Array[Int]:

var i = 0

var d = List.empty[(Int, Int, Int)]

val n = as.length

while (i < n) {

d = (as(i), bs(i), cs(i)) :: d

i += 1

}

Case Study #2: building List[(Int, Int, Int)]

Let's not use Tuple3 (less boxing):

case class III(a: Int, b: Int, c: Int)

var i = 0

var d = List.empty[III]

val n = as.length

while (i < n) {

d = III(as(i), bs(i), cs(i)) :: d

i += 1

}

Case Study #2: building List[(Int, Int, Int)]

Can we have still fewer allocations? Sure!

sealed trait IIIList {

def a: Int

def b: Int

def c: Int

def tail: IIIList

}

case object IIINil extends IIIList {

def a = sys.error("die")

def b = sys.error("die")

def c = sys.error("die")

def tail = sys.error("die")

}

case class IIICons(a: Int, b: Int, c: Int, tail: IIIList) extends IIIList

Case Study #2: building List[(Int, Int, Int)]

Using the IIIList types still looks pretty similar to our List example.

var i = 0

var d: IIIList = IIINil

val n = as.length

while (i < n) {

d = IIICons(as(i), bs(i), cs(i), d)

i += 1

}

Case Study #2: building List[(Int, Int, Int)]

Can we do better still? Maybe (it depends).

sealed trait I6List {

def a1: Int

def a2: Int

def b1: Int

def b2: Int

def c1: Int

def c2: Int

def tail: I6List

def prepend(a: Int, b: Int, c: Int): I6List = I6Cons(a, b, c, this)

}

case object I6Nil extends I6List {

def a1: Int = sys.error("argh")

def a2: Int = sys.error("argh")

def b1: Int = sys.error("argh")

def b2: Int = sys.error("argh")

def c1: Int = sys.error("argh")

def c2: Int = sys.error("argh")

def tail: I6List = sys.error("argh")

}

case class I6Cons(a1: Int, b1: Int, c1: Int, tail: I6List) extends I6List {

def a2: Int = sys.error("argh")

def b2: Int = sys.error("argh")

def c2: Int = sys.error("argh")

override def prepend(a: Int, b: Int, c: Int): I6List = I6ConsCons(a, b, c, a1, b1, c1, tail)

}

case class I6ConsCons(a1: Int, b1: Int, c1: Int, a2: Int, b2: Int, c2: Int, tail: I6List) extends I6List

Case Study #2: building List[(Int, Int, Int)]

Things like I6List or Vector have:

- slower cons, uncons

- more complex structure

- better memory usage

Case Study #2: building List[(Int, Int, Int)]

So... what does all this look like?

Case Study #2: building List[(Int, Int, Int)]

For n=131072, we have:

- List[(Int, Int, Int)] takes 27.5ms.

- List[III] is 3.0x faster

- IIIList is 9.3x faster

- I6List is 11.2x faster

- I6ListFaster is 22.3x faster

(but it cheats!)

Of course, using an array would be even faster if we don't need a cons-like structure.

Cheating is Good

At a low-level, we'd like to be able to cut any corner we can.

Classical information-hiding (often considered best practice) makes this harder.

Typeclasses are a nice way mechanism to allow type-specific cheating.

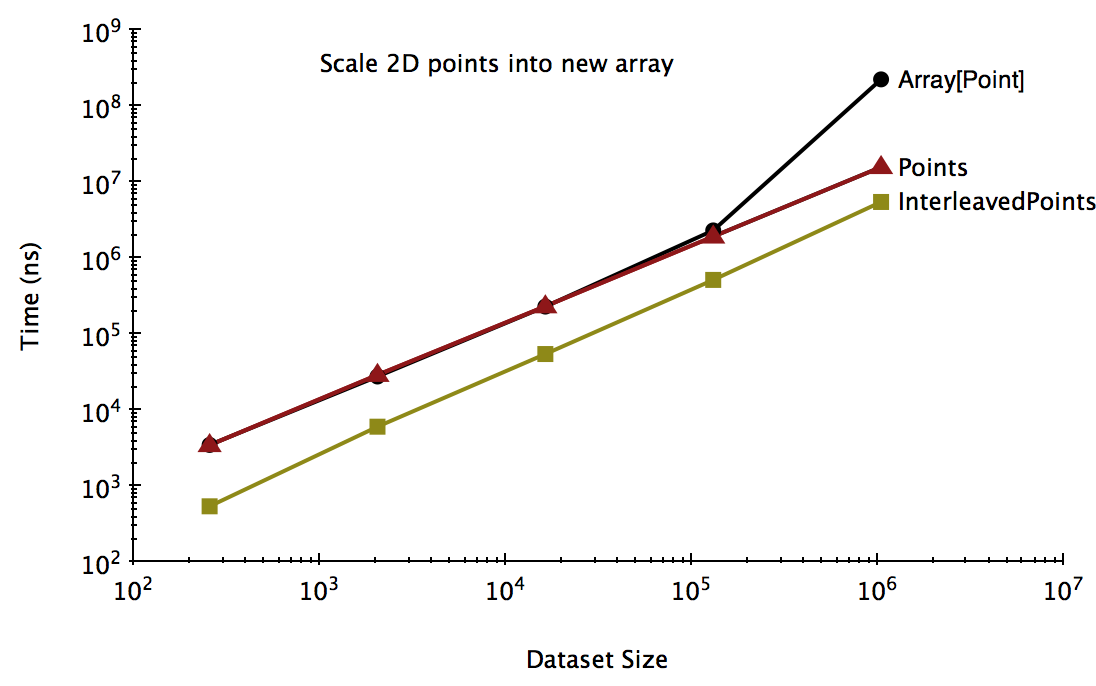

Case Study #3: processing 2D points

Eliminating boxing and allocations isn't always the best way to improve performance.

Cache behavior is also very important.

Case Study #3: processing 2D points

case class Point(x: Double, y: Double)

// naive

type PointArray = Array[Point]

// avoid allocating Point instances

case class Points(

xs: Array[Double],

ys: Array[Double]

)

// interleave X and Y coordinates

case class InterleavedPoints(

xs: Array[Double],

ys: Array[Double]

)

Case Study #3: processing 2D points

Let's perform a simple scaling operation:

// implemented for Array[Point]

val len = n

val ps2 = new Array[Point](len)

var i = 0

while (i < len) {

val p = ps(i)

val x = p.x

val y = p.y

val s = sqrt(x * x + y * y)

ps2(i) = Point(x / s, y / s)

i += 1

}

Case Study #3: processing 2D points

I'm going to skip the other two implementations, since they are similar.

Remember: InterleavedPoints will have a single array that's twice as long.

Case Study #3: processing 2D points

Results:

InterleavedPoints is 3-6x faster than Points.

At n=1M the Array[Point] is 14x slower than Points.

Case Study #3: processing 2D points

Lesson: good cache usage can be more significant than minimizing allocations.

The JVM is surprisingly good at dealing with 131,072 Point allocations.

Less so at dealing with 1,048,576 allocations.

Aren't arrays bad?

Arrays are mutable. It's a fact.

I like to consider arrays mutable only during a "construction phase". Since cloning arrays is actually quite fast, don't be afraid of making copies when it's reasonable.

(If you find yourself manually appending to an array with copying, stop!)

If you're hardline, consider a wrapper that only exposes Array#apply.

Aren't arrays bad?

For a good example of how to handle arrays well, look at the GeoTrellis project.

Arrays are assembled mutably, but considered immutable once they are shared/global.

Lots of high-level laziness, parallelism, etc with a very fast core.

(I helped build it while at Azavea. Hi guys!)

What about Vector?

For the kinds of problems being discussed, I don't think Vector is that great.

Works great for shared state, mid-to-high level code. Less useful at the low-level.

Effectively constant time access is still much slower than array access time.

Also boxing.

What about other Scala collections?

There are very few built-in Scala types that are specialized. When I checked they were:

- Function0, Function1, Function2

- Tuple1, Tuple2

- Future

- Range#foreach

It's safe to assume that any other generic types/methods you use will box.

Even though we have value classes, lots of implicit adaptors still allocate.

What about other Scala collections?

If you have a relatively precise idea of what you want, you can probably build data structures that are faster than the built-in ones.

Debox is my own exercise in this direction.

Arent you advocating NIH?

NIH ("Not Invented Here") is worth avoiding, but sometimes (re-) inventing things is how you make them faster.

You (or your team) are in the best decision to evaluate the trade-offs.

I tend to consider a 2x speed up "worth it" for the critical path.

Understanding the Ethos

You can find all the benchmarking code on Github. The examples use Caliper.

When doing this kind of experimenting and testing it's very important to:

- Present performance results in a context

- Impossible to be sure how fast things will run without testing

- Find a baseline (ideally many)

- Note software versions

(these benchmarks done on OSX, Scala 2.10, Java 7) - Test correctness!!!

Closing Remarks

- Be very reluctant to introduce shared mutable state

- Be very reluctant to give up on referential transparency

- Less code doesn't necessarily mean faster. Neither does more

- (On the JVM) modularity and reuse can be the enemy of speed

- Implementing the precise types/methods you need is worth it

- Try to think bottom-up: do only what is needed

- Consider your "allocation budget"

See Also

There is a huge wealth of JVM optimization lore out there. Here are some useful links:

- HotSpot internals is like a magical grimoire

- Hard-won wisdom from Twitter JVM configuration/profiling

- Cliff Click discusses the inlining problem

- John Rose discusses structs/values types/allocation

- "Java Performance" is an interesting book

- Cliff Click explains OSR

- Atlassian talks about the dreaded "Code Cache Is Full" message

The End